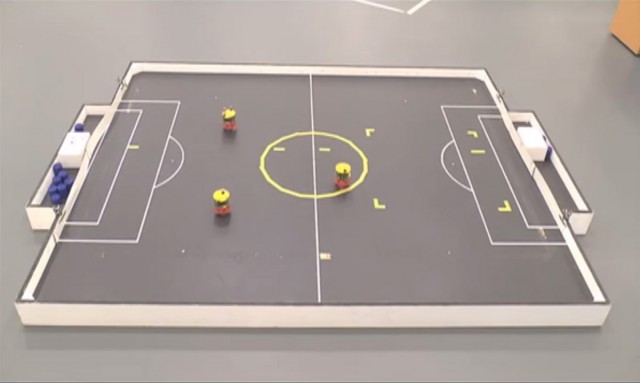

Roboticist Alan Winfield works at Bristol Robotics Laboratory. With his team, he has developed a program that makes a robot behave kindly: the robot prevents other robots from falling into a hole. It follows the First Law of Robotics, coined by Isaac Asimov in his literary works dedicated to robots: “A robot may not injure a human being or, through inaction, allow a human being to come to harm.”

The robot had to perform a simple task: rescue other machines and prevent them from falling into the hole by changing their trajectory. The result was a success. As soon as it detected that another robot was about to fall into the hole, it moved forward at full speed to change its colleague's trajectory. The researchers then repeated the experiment with two robots to be rescued at the same time. In 14 of 33 trials, the robot failed to provide any assistance and let both robots fall into the hole. The rest of the time, it decided to save one and left the other to its fate. In some rare cases, however, it managed to position itself so that neither of the two robots fell into the hole.

Alan talks about a phenomenon he calls “zombie ethics.” The robot behaves as a savior as much as it can. It is programmed to help specific entities using a pre-programmed technique, but it does the job without understanding the reasons for its actions. Before conducting this experiment, Alan was convinced that machines could not make a choice by following a defined ethic (here, preventing someone from falling). Now he thinks it may well be possible after all.

One thing is certain: if robots can be programmed to have the power of free will, it could change a lot of things. Since they play an increasingly important role in our daily lives, this is an important topic to discuss. For example, suppose you are sitting in an autonomous car and a pedestrian suddenly steps into its path. The robot then has to decide whose safety is more important: the passenger's or the pedestrian's. A complex dilemma indeed.

To answer these questions, robots are already being tested at the Georgia Institute of Technology in Atlanta and in the research department of the United States Navy. Ronald Arkin, a computer scientist, develops algorithms for machines, dubbed “ethical governors,” that help humans make good decisions in combat (currently at the simulation stage only). The most important factor considered is the minimization of casualties.

According to Ronald, it is easy to program robots to comply with military laws because these laws are well known and clearly written. A human, on the other hand, has emotions and is more likely to be disturbed by the situation, which may lead him to make the wrong decision. Research on the ethics of robots is in its infancy but is progressing. If manufacturers are one day able to program machines for unpredictable and complex environments, a huge field of applications will open up to them.

[youtube]http://www.youtube.com/watch?v=jCZDyqcxwlo[/youtube]

We are delighted to learn that robots can make decisions for themselves. We can already imagine machines taking on tasks that require extreme, foolproof concentration over long periods. In the future, why not robot firefighters, or fully autonomous planes that can avoid thunderstorms by themselves? Of course, this worries us too. We cannot simply entrust our lives to machines without human supervision. Do you think that robots could make critical decisions in the future?